WHAT is DQ

– the definition

Data is a fact (Latin: factum; Greek: πράγματα, "created"). A fact is a social agreement on an idea that serves as a reference for other (subjective) ideas. Data, as facts, have validity and call for verification.

Quality in the neutral sense is the collection of traits and properties that characterize beings and things (states and processes). This neutral definition of quality addresses the question of What is DQ (data quality), while quality in the evaluative sense is raised by Why DQ.

The next sections illustrate What is DQ and highlight which DQ components (capabilities and sources) have a crucial impact on business success. The final section summarizes the key messages of DQ efforts and confirms the definition.

WHAT is DQ

– the integrit•ization

The traditional approach is limited to running DQ checks, that is, verifying and validating data, preferably through automation.

In advanced data landscapes, validations and verifications are managed by rules.

Integritization is equivalent to verification.

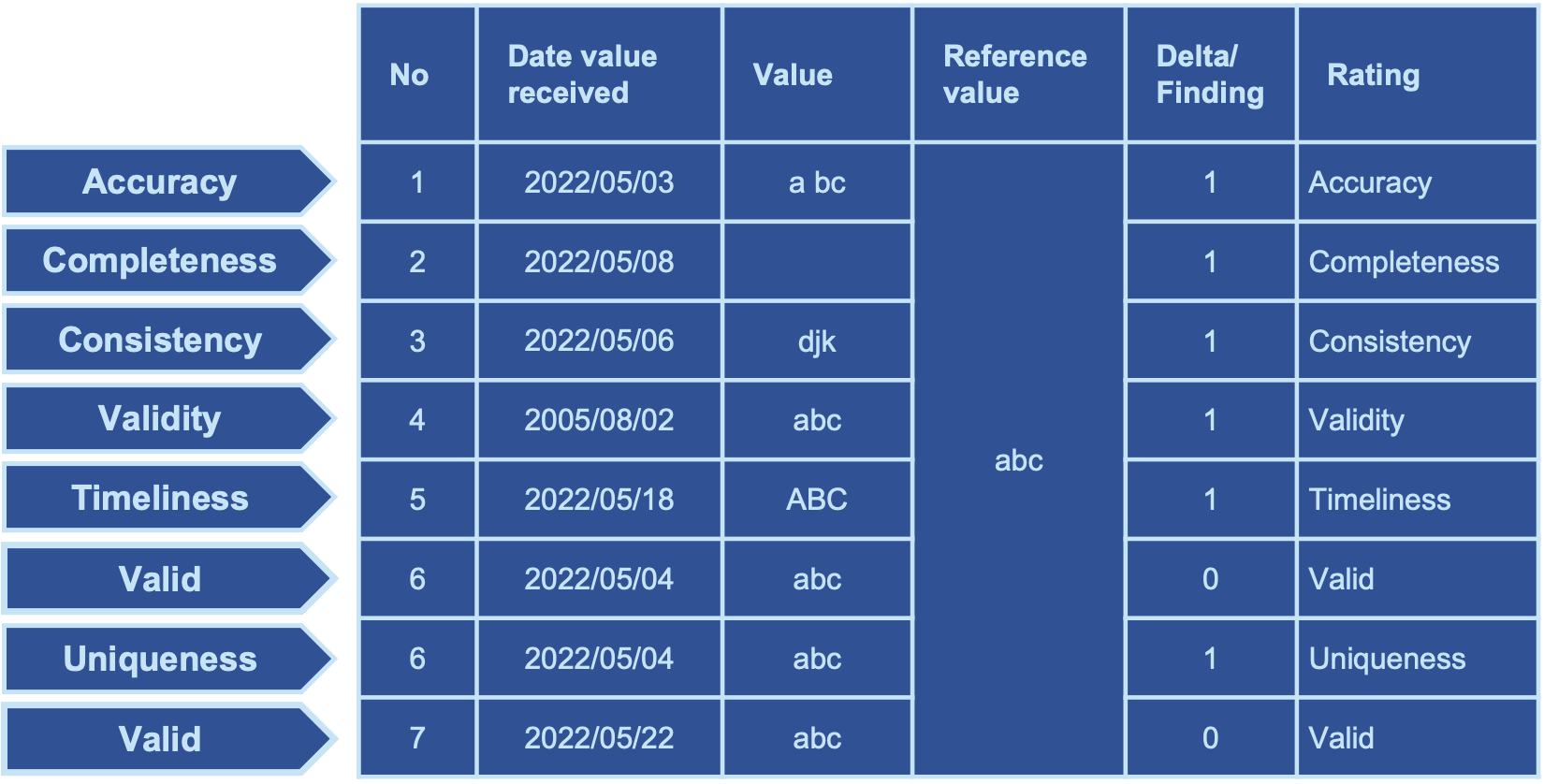

Data sanity is indicated by a set of data integrity dimensions. These dimensions act as principles for the health of data.

They also characterize business data with attributes, called metadata.

The principles that serve to validate and verify data shape trust in decision making and the credibility of data suppliers.

Data is called invalid, biased, or a finding when it breaches one or more integrity verification rules.

The table below visualizes integrity violations for each dimension.

Know-how:

"Identify data health through integrity checks. Sketch and share the data health situation with a heat map."
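The integrity checks and heat map described above can be sketched as a small rule catalogue. This is a minimal illustration under stated assumptions, not the author's tooling: the dimensions (completeness, uniqueness, validity), the field names, and the sample records are hypothetical, since the dimensions actually used appear only in the figure. Each rule verifies one column against one dimension, and the result is a column × dimension matrix of violation rates that could be coloured as a heat map.

```python
from collections import defaultdict
from typing import Any, Callable

Record = dict[str, Any]
Rule = Callable[[Any, list[Any]], bool]  # (value, whole column) -> passes?

# Hypothetical sample data; field names are illustrative only.
RECORDS: list[Record] = [
    {"id": 1, "email": "a@example.com", "age": 34},
    {"id": 2, "email": None, "age": 29},
    {"id": 2, "email": "c@example", "age": -5},
    {"id": 4, "email": "d@example.com", "age": None},
]


def complete(value: Any, _column: list[Any]) -> bool:
    return value is not None


def unique(value: Any, column: list[Any]) -> bool:
    # Missing values count against completeness, not uniqueness.
    return value is None or column.count(value) == 1


def valid_email(value: Any, _column: list[Any]) -> bool:
    return value is None or ("@" in value and "." in value.split("@")[-1])


def valid_age(value: Any, _column: list[Any]) -> bool:
    return value is None or 0 <= value <= 130


# Assumed rule catalogue: (column, integrity dimension) -> verification rule.
RULES: dict[tuple[str, str], Rule] = {
    ("id", "completeness"): complete,
    ("id", "uniqueness"): unique,
    ("email", "completeness"): complete,
    ("email", "validity"): valid_email,
    ("age", "completeness"): complete,
    ("age", "validity"): valid_age,
}


def heat_map(records: list[Record],
             rules: dict[tuple[str, str], Rule]) -> dict[str, dict[str, float]]:
    """Share of records breaching each rule, arranged as column x dimension."""
    matrix: dict[str, dict[str, float]] = defaultdict(dict)
    for (col, dim), check in rules.items():
        values = [r.get(col) for r in records]
        findings = sum(not check(v, values) for v in values)
        matrix[col][dim] = findings / len(records)
    return dict(matrix)


if __name__ == "__main__":
    dims = sorted({dim for _, dim in RULES})
    print("column".ljust(8) + "".join(d.rjust(14) for d in dims))
    for col, row in heat_map(RECORDS, RULES).items():
        cells = (f"{row[d]:.0%}" if d in row else "-" for d in dims)
        print(col.ljust(8) + "".join(c.rjust(14) for c in cells))
```

A dash marks a column/dimension pair without a rule; in practice the catalogue would be maintained by the data owners rather than hard-coded.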

WHAT is DQ

– the utilitarian•ization

Utilitarianizations are simply attributions of data: they enrich data with metadata that express revenue, costs, sufficiency, efficiency, effectiveness, and other economic or monetary figures.

Utilitarianization is equivalent to validation.

The utilitarian principle is that each data finding is weighted with an economic value. In this way, data sanity is turned into findings with an economic measure (time, count, $). I call this process the utilitarianization of integrity; some authors call it incremental validation of data. Whatever it is called, it finally gives data (findings) a shape, a form, a face, a Gestalt.

Not only insane data but also integrity efforts themselves can harm business goals, up to total ruin. It is therefore crucial to categorize data (integrity) findings with (economic) attributes (metadata).
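A minimal sketch of utilitarianization, assuming each finding can be given a record count, a remediation time per record, and a business loss per record. The hourly rate, rule names, and figures are hypothetical. Comparing the avoided loss with the remediation cost shows how integrity efforts can themselves exceed the damage they prevent.

```python
from dataclasses import dataclass

HOURLY_RATE_USD = 80.0  # hypothetical cost of one hour of remediation work


@dataclass(frozen=True)
class Finding:
    rule: str                      # integrity rule that was breached
    count: int                     # number of breaching records
    loss_per_record_usd: float     # assumed business damage per record if left as-is
    fix_minutes_per_record: float  # assumed remediation time per record


def economic_view(f: Finding, hourly_rate: float = HOURLY_RATE_USD) -> dict[str, float]:
    """Attach economic measures (count, time, $) to an integrity finding."""
    hours = f.count * f.fix_minutes_per_record / 60
    loss = f.count * f.loss_per_record_usd
    fix = hours * hourly_rate
    return {"count": f.count, "hours": hours, "loss_usd": loss,
            "fix_usd": fix, "net_usd": loss - fix}


if __name__ == "__main__":
    findings = [
        Finding("email.validity", 120, 2.5, 3.0),
        Finding("id.uniqueness", 40, 50.0, 15.0),
    ]
    for f in findings:
        v = economic_view(f)
        verdict = "remediate" if v["net_usd"] > 0 else "accept"
        print(f"{f.rule:15} {v['hours']:5.1f} h  loss ${v['loss_usd']:7.0f}  "
              f"fix ${v['fix_usd']:6.0f}  -> {verdict}")
```

A negative net value flags findings where remediation would cost more than it saves, which is one simple way to express the "right dose" of integrity effort.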

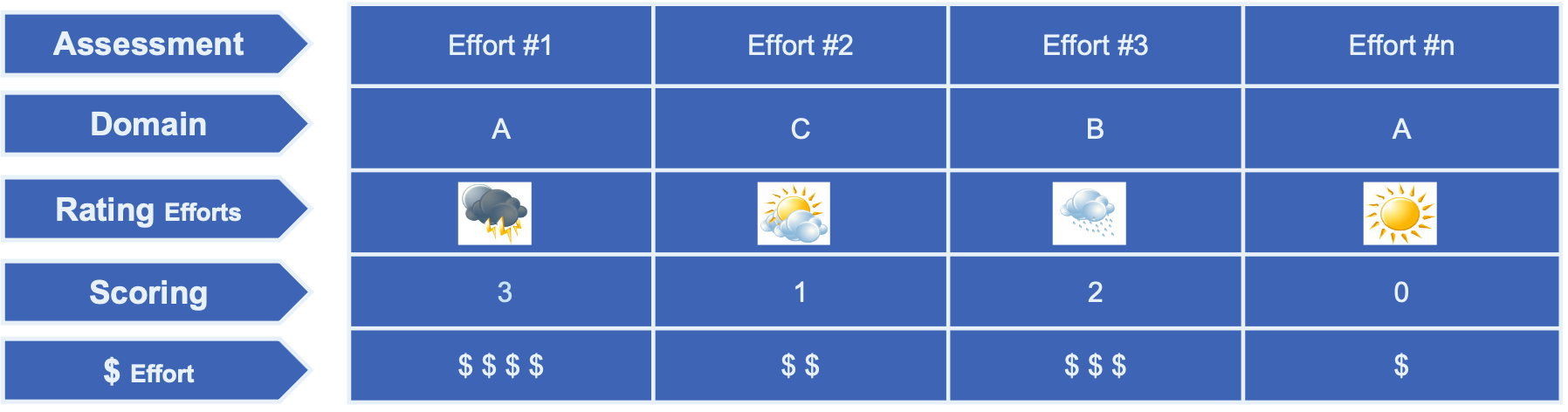

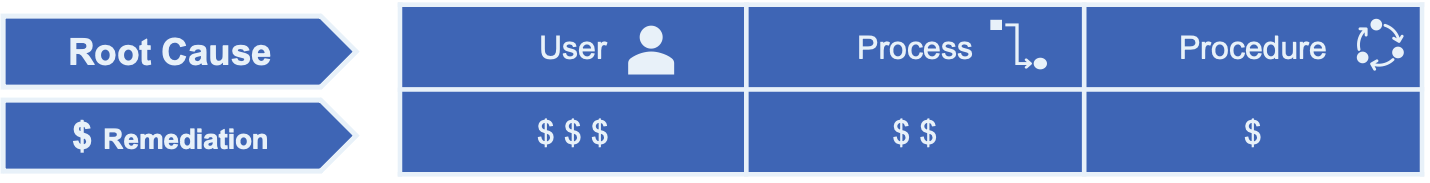

Therefore, right after the integrity checks, types of biased data should be assessed in terms of

- Impact on business

- Effort of remediation

where biased data is conditioned and rated by

- Business impact (urgency+severity)

- Estimated effort

- Root cause

- Remediation type

These ratings help allocate a balanced fit of the required resources (time, scope, and budget).
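The rating above can be sketched as a simple prioritization. Assumptions: urgency and severity on a 1 to 5 scale, effort in hours, free-text root cause and remediation type, and a fixed budget of hours; all findings are hypothetical. Ranking by impact per effort hour and filling the budget greedily is a heuristic chosen for brevity, not an optimal allocation (that would be a knapsack problem).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RatedFinding:
    name: str
    urgency: int         # assumed scale 1 (low) .. 5 (high)
    severity: int        # assumed scale 1 (low) .. 5 (high)
    effort_hours: float  # estimated remediation effort, must be > 0
    root_cause: str      # e.g. "manual entry", "interface mapping"
    remediation: str     # e.g. "fix at source", "cleanse", "accept"

    @property
    def impact(self) -> int:
        # Business impact = urgency + severity, as in the rating list.
        return self.urgency + self.severity


def plan(findings: list[RatedFinding],
         budget_hours: float) -> tuple[list[RatedFinding], float]:
    """Greedy heuristic: highest impact per effort hour first, within the budget."""
    ranked = sorted(findings, key=lambda f: f.impact / f.effort_hours, reverse=True)
    chosen: list[RatedFinding] = []
    used = 0.0
    for f in ranked:
        if used + f.effort_hours <= budget_hours:
            chosen.append(f)
            used += f.effort_hours
    return chosen, used


FINDINGS = [
    RatedFinding("duplicate customer ids", 5, 4, 8, "interface mapping", "fix at source"),
    RatedFinding("invalid email format", 2, 2, 6, "manual entry", "cleanse"),
    RatedFinding("missing birth date", 3, 4, 4, "manual entry", "fix at source"),
    RatedFinding("negative age", 4, 5, 20, "legacy import", "cleanse"),
]

if __name__ == "__main__":
    chosen, used = plan(FINDINGS, budget_hours=16)
    for f in chosen:
        print(f"{f.name:25} impact={f.impact} effort={f.effort_hours}h "
              f"cause={f.root_cause} remedy={f.remediation}")
    print(f"budget used: {used} of 16 h")
```

Root cause and remediation type are carried along rather than scored here; grouping the chosen findings by root cause would be a natural next step.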

Such combined validation and verification of data shapes trust in decision making and the credibility of data suppliers.

Unfortunately, decorating findings with economic measures is not yet standard practice today, nor is it managed by rules.

Know-how:

"Identify data integrity value through assessments of business impact, estimated effort, root cause, and remediation type. Determine the right dose of data integrity efforts for the future."

WHAT is DQ

– Six Sigma DMAIC

Experience shows that in many cases it is sufficient, even efficient, to keep data compliance at low levels. This is why data-directed governance roles are introduced to assess the right DQ proportions of scope and budget.

Thus, traditional quality checks, data team organizations, and data team spirit call for change: validations and verifications need to be embedded into a DQ Lifecycle methodology that can assess and handle integrity processes and condition / tag them with sufficient and effective utilitarian / economic figures.

DMAIC is the preferred DQ Lifecycle Improvement Process and is prominently used in the Six Sigma methodology. DMAIC stands for the phases Define, Measure, Analyze, Improve, and Control, and it provides methods for incremental quality improvement.
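One way to picture DMAIC as an incremental loop is the following simulation. It is a hedged sketch, not a Six Sigma reference implementation: a finding is reduced to a record id and a root cause, Define fixes a target failure rate, Measure computes the current rate, Analyze picks the most frequent root cause, Improve assumes that fixing this cause at the source clears its findings, and Control re-measures and stops once the target holds. All names and figures are hypothetical.

```python
from collections import Counter
from enum import Enum
from typing import Optional

Record = tuple[int, Optional[str]]  # (record id, root cause of its finding or None)


class Phase(Enum):
    DEFINE = "Define"
    MEASURE = "Measure"
    ANALYZE = "Analyze"
    IMPROVE = "Improve"
    CONTROL = "Control"


def failure_rate(records: list[Record]) -> float:
    return sum(cause is not None for _, cause in records) / len(records)


def dmaic(records: list[Record], target_rate: float, max_cycles: int = 5):
    """Run D-M-A-I-C cycles until the Control phase confirms the target."""
    current = list(records)
    log: list[tuple[int, Phase, str]] = []
    for cycle in range(1, max_cycles + 1):
        log.append((cycle, Phase.DEFINE, f"goal: failure rate <= {target_rate:.0%}"))
        log.append((cycle, Phase.MEASURE, f"failure rate {failure_rate(current):.0%}"))
        causes = Counter(c for _, c in current if c is not None)
        if not causes:  # nothing left to analyze
            break
        top_cause = causes.most_common(1)[0][0]
        log.append((cycle, Phase.ANALYZE, f"top root cause: {top_cause}"))
        # Simulation only: assume fixing the cause at the source clears its findings.
        current = [(rid, None if c == top_cause else c) for rid, c in current]
        log.append((cycle, Phase.IMPROVE, f"remediated: {top_cause}"))
        rate = failure_rate(current)
        log.append((cycle, Phase.CONTROL, f"failure rate {rate:.0%}"))
        if rate <= target_rate:
            break
    return failure_rate(current), log


# Hypothetical findings: 10 records, 6 of them breaching a rule.
RECORDS: list[Record] = list(enumerate(
    ["manual entry"] * 3 + ["interface mapping"] * 2 + ["legacy import"] + [None] * 4
))

if __name__ == "__main__":
    final_rate, log = dmaic(RECORDS, target_rate=0.15)
    for cycle, phase, note in log:
        print(cycle, phase.value.ljust(8), note)
    print(f"final failure rate: {final_rate:.0%}")
```

The target of 15% deliberately leaves one finding unresolved, in line with the observation that low compliance levels can be sufficient.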

DMAIC uses the data quality pillars:

1. Integrit•ization

2. Utilitarian•ization

DQ integrates the data governance pillars:

3. Glossar•ization

4. Model•ization

5. FAIR•ization

6. Organ•ization

Glossar•ization is part of Model•ization.

Know-how: "Apply the Six Sigma DMAIC Lifecycle methodology to improve your DQ incrementally."

WHAT is DQ

– real world evidence

The first graph shows data entry / integration and check / verification; the second shows data validation. All are key success components that provide data scientists and labs with trustworthy data. The graphs demonstrate the volume of preparation and effort required before data becomes valid and verified for further consumption.

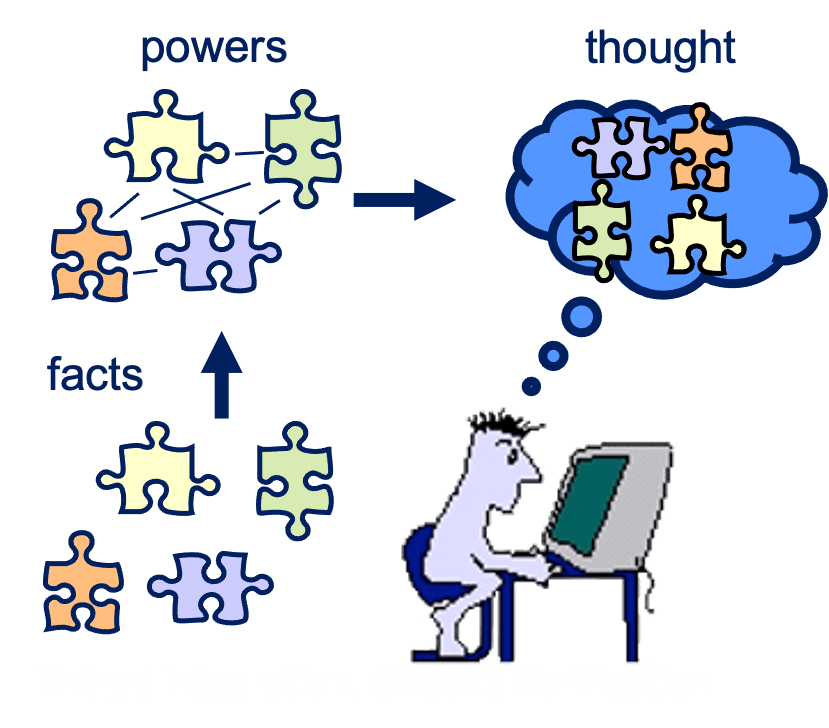

The combinations of the puzzle pieces represent a train of thought, that is, of ideas. This process activates extraction, transformation, aggregation, validation, and verification techniques performed by Data Owners, Data Stewards, and Data Advisors. All these mental and physical techniques also belong to DQ; they (trans)form an idea into a physical or mental unit that can be reused by further thoughts, such as those of a data scientist. The data scientist finally creates value through truth. Of course, all actions become facts only when they are shared within regulated communities that follow agreed social rules.

Know-how: "Free the Data Lab and the Data Scientist from root cause analysis and protect them from fallacies."

WHAT is DQ

– practice

Weather forecasts, for instance, are complex integrations and calculations based on historical and current weather data. In turn, weather data serve to forecast crop growth as well as electric power demand and supply, enabling decisions that regulate harvests and power production. Here, the principles of integrity and business must validate and verify the data. DQ critically impacts the value of data as an asset.

Forecasts and decisions built on high DQ levels generate contentment, trust, commitment, credibility, and sustainability.

Hence, validation and verification techniques lead to real-world evidenced data and generate maximum trust in figures.

Know-how: "Approach the real world through coached practice."

WHAT is DQ

– the key messages

DQ is a set of principles, standard operating procedures, work instructions, or user journeys on data integrity and business rules that validate and verify data, shaping trust in decision making.

Insights and forecasts built on high DQ levels generate contentment, trust, commitment, credibility, and sustainability. This is the link from What is DQ to Why DQ.

Both key messages return to the terms used in the section Definition: data are facts, and quality is what characterizes beings and things.