WHAT is DG

- the definition

Data is a fact (Latin: factum, Greek: πράγματα – created). A fact is a social agreement on an idea, made with reference to other (subjective) ideas. Data / facts have validity and call for verification.

Governance in the material sense is a grid of vocabularies that empowers human beings (data stakeholders & consumers) to manage business capabilities, concepts, and terms. Vocabularies are most likely captured concisely and physically located in business glossaries. Glossaries can be turned into catalogues once they are enriched with lineage / taxonomies and literacy. This material type of governance definition addresses the question of What is DG. Governance in the mental sense is raised by Why DG.

The next sections exemplify What is DG and emphasize which DG sources have a crucial impact on business success. The final section exposes the key messages of DG efforts and confirms the definition.

WHAT is DG

- the glossar•ization

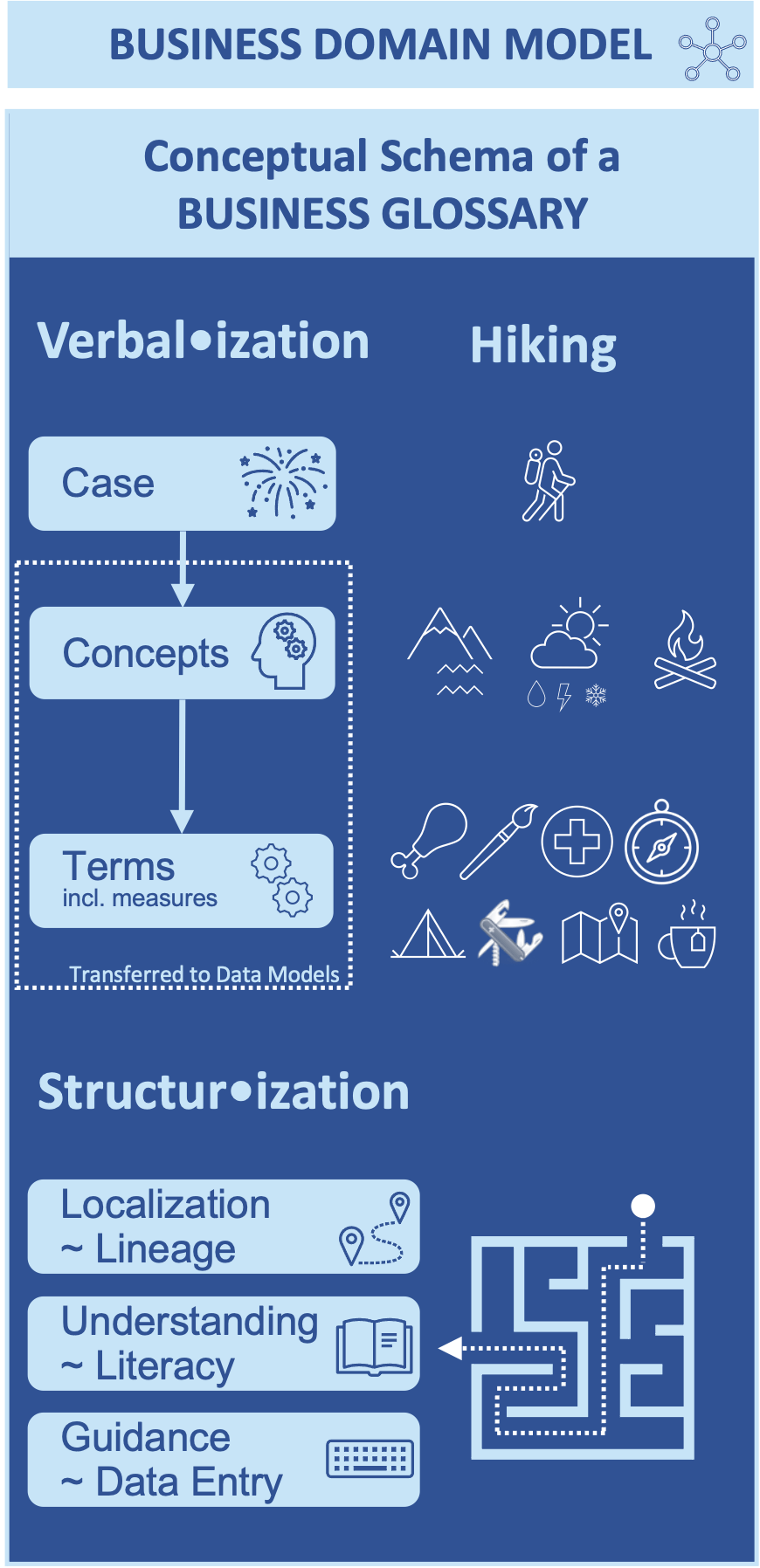

Before data modeling starts, a business glossary is essential for operating with the ENTITY / ontology of vocabularies.

- In the Tractatus of Wittgenstein an ENTITY is an umbrella term for the set {case, pictures, facts with atomic facts}

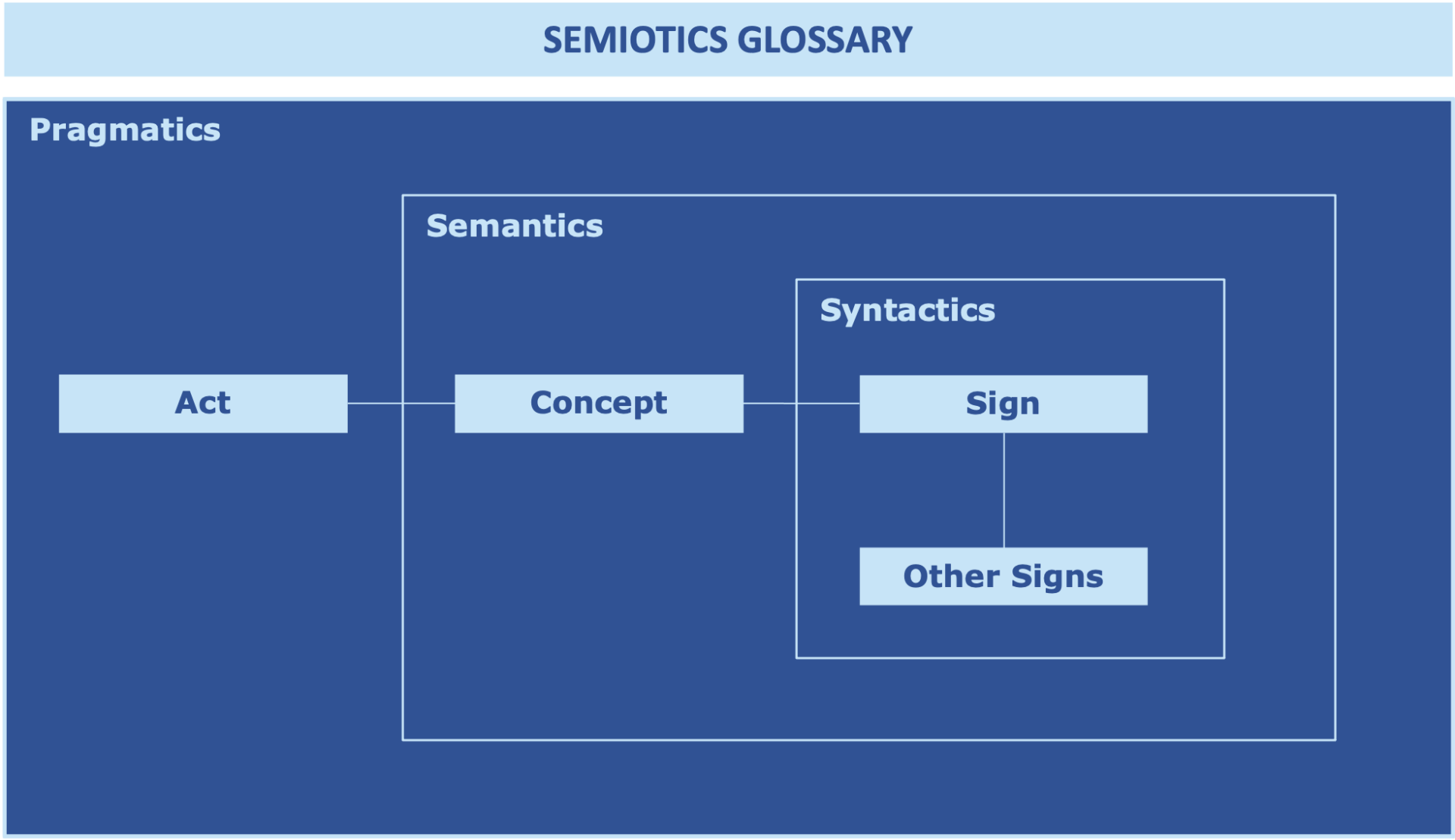

- In Semiotics of Peirce an ENTITY is an umbrella term for the set {act, concepts, signs vs. other signs} ~ {pragmatism, semantics, syntactics}

- In (business) practice ENTITY is expressed as the entity set {case, concepts, terms}

Thus, ENTITY is often used in a broad but confusing sense. I will limit the focus to (business) practice with the ordinary vocabulary of natural language:

- Case represents a mental or physical act that generates concepts. Cases are pre•positional.

- Concepts are units of semantics. They represent a class of objects, in practice often aggregated by a row. Concepts are functions.

- Terms represent syntax / instance, in practice columns. The purpose of terms is to create incremental knowledge through ongoing mutuality and aggregation. Terms are pro•positional. They can also take the form of (artificially created) measures, expressing in•sights about concepts and other terms. Measures are often exposed in cells through pivot•ization – a popular technique that drills rows and columns down to aggregated but volatile information.
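As a minimal sketch of pivot•ization, the following plain-Python example aggregates a measure over rows and columns. The records, the field names (`product`, `region`, `revenue`), and the function `pivot` are hypothetical and only illustrate the idea; real tools such as spreadsheets or BI software do the same with more options.

```python
from collections import defaultdict

# Hypothetical records: each dict is one row, each key is a term (column).
rows = [
    {"product": "tent", "region": "north", "revenue": 120},
    {"product": "tent", "region": "south", "revenue": 80},
    {"product": "boots", "region": "north", "revenue": 50},
    {"product": "boots", "region": "north", "revenue": 30},
]

def pivot(records, row_key, col_key, value_key):
    """Sum value_key for every (row_key, col_key) combination."""
    table = defaultdict(lambda: defaultdict(int))
    for rec in records:
        table[rec[row_key]][rec[col_key]] += rec[value_key]
    # Convert nested defaultdicts to plain dicts for readable output.
    return {r: dict(cols) for r, cols in table.items()}

revenue_by_product_region = pivot(rows, "product", "region", "revenue")
print(revenue_by_product_region)
```

The resulting cells are measures: they are derived from the source rows and change as soon as the rows change, which is why the text calls them volatile.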

Such an ENTITY represents the entire taxonomy of a vocabulary.

Thus, glossar•ization is mainly a process of annotating concepts & terms with meaning and with instructions on

- where to locate (~Lineage),

- how to understand (~Literacy),

- how to enter (~Guide)

data.

Instructions are deliverables in the form of policies, principles, manuals, procedures, guidelines, etc. The main objective of in•struct•ions is to structure vocabularies and to show users the way out of the maze of vocabularies.

However, glossar•ization also has to picture the pre•positional cases and the pro•positional measures.

Comprehensively, the business data glossary is built on:

- Case

- Concepts

- Terms

- Data Lineage

- Data Literacy

- Data Entry Guide
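The six building blocks above can be sketched as a small record type. This is an illustrative assumption, not a prescribed glossary schema: the class `GlossaryEntry`, its field names, and the lineage value are hypothetical, while the sample values reuse the hiking equipment example given later in this section.

```python
from dataclasses import dataclass

@dataclass
class GlossaryEntry:
    """One business glossary ENTITY plus its three enrichment parts."""
    name: str
    case: str
    concepts: list
    terms: list
    lineage: str = ""      # where to locate the data
    literacy: str = ""     # how to understand the data
    entry_guide: str = ""  # how to enter the data

    def missing_parts(self):
        """Return the names of the enrichment parts that are still empty."""
        parts = {
            "lineage": self.lineage,
            "literacy": self.literacy,
            "entry_guide": self.entry_guide,
        }
        return [name for name, text in parts.items() if not text.strip()]

    def is_catalogue_ready(self):
        """True once lineage, literacy, and entry guide are all filled in."""
        return not self.missing_parts()

hiking = GlossaryEntry(
    name="Hiking equipment",
    case="hiking",
    concepts=["adventure"],
    terms=["tools"],
    lineage="hypothetical source: shop_db.equipment",
)
print(hiking.missing_parts())
```

A check like `is_catalogue_ready` makes explicit which entries still lack enrichment before a glossary is extended to a catalogue.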

The expectations of such a data glossary are:

- Record of transparent and plain business language

- Reconciled, consistent labeling of concepts & terms

- Accurate maintenance of concepts & terms

- Sharing of consistent vocabulary among all business units

- Socialized verbalization of concepts & terms

- Well-trained, network-affine Data Stewards and an empowered Data Office

- Concepts & terms are transferred to logically built data models.

- Concepts & terms are renamed in the logically built data models to entity-instance & entity-type / class.

- Concepts & terms build relations through attributes.

Know-how:

"Create a business glossary and extend to a catalogue if required."

ENTITY and its symbolism by Wittgenstein

ENTITY and its symbolism by Peirce

Entity and its taxonomy by practice:

- ENTITY name = Hiking equipment = {case, concepts, terms} = {hiking, adventure, tools}.

- Once the glossary has been enriched with lineage, literacy, and an entry guide, it can be shaped into a catalogue with order-IDs.

- The whole conceptual schema of business glossary (vocabulary + structure) is sometimes also called semantic model.

There are 2 different meanings of the term entity:

- ENTITY as set of symbols: {case, concept, term}

- Entity as a unit of a table: {row, record, tuple, function, object, instance}

WHAT is DG

- the model•ization

Recap of model, schema, and table:

- A model is a mental / spiritual process / picture of reality. It is captured with a schema of an ENTITY, i.e. a schema is a deliverable of a model•ization.

- The quality of the picture depends on 2 capabilities:

  - Experiences that have been practiced before

  - Representational (thus only logical) power of reality, where someone builds links between facts, so-called atomic facts / states of affairs. Representational powers are utilized to replicate reality as closely as possible.

- A model is like creating a painting by numbers, i.e. digits:

  - Measure the real object that you want to transfer to your canvas mentally

  - Segment a canvas into mosaics and map the proportions of the mosaics to reality

  - Shape the objects of each mosaic with a punctuation that replicates / maps reality and, besides physicality, includes contrast, depth, sharpness, greyscale, colorfulness, etc.

  - Digitize each mosaic dot through consecutive numbering

  - Identify and accentuate the dots of the mosaics that need to be coupled for a full picture of reality.

- A table is a breakdown of an entity into its attributes / properties. It is subordinate to a schema. Tables contain vocabularies and inner relationships. Relationships to other, outer columns and tables are anchored through artificial relation keys. This is also where AI is domiciled.

In practice, every logical business data ENTITY needs to be visualized by IT table vocabularies.

1. Basic Data Solution Designs:

Map the schema of the business glossary ENTITY directly to the physical DBMS (database management system) schema, without a (logical) model in between, in order to conduct data integrations. Transfer the data from the data glossary to the database with an ETL tool of your choice.

This approach would raise several problems:

- Many mappings would be required, with tremendous maintenance effort whenever the source business glossary system or the physical target DBMS changes.

- Data presentations would need ongoing customizations of the physical presentation layers.

- Radical changes or full replacements of target DBMS technology would require complete re-designs (and re-mapping) of integration architectures.

- DBMS downtimes would interrupt operations.

Such inefficient peer-to-peer architectures call for logical intermediate layers, i.e. data solution designs with a logic that is . . .

- fluid and flexible,

- scalable,

- non-disruptive to complementary data solutions.

I.e., while the business continuously works with the ENTITY {case, concepts, terms}, lineage, literacy, and entry guide, computer science aims to replicate / model / map the business-logic ENTITY with a data-logic ENTITY. Such model•izations help to express business reality in logically phrased schemas of concepts & terms. Models act as intermediaries between business logic / glossaries and physical, computational DBMS technologies.
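The maintenance argument for an intermediate logical layer can be illustrated with simple arithmetic. The sketch below assumes, as a simplification not stated in the text, that every source has to be mapped to every target; the function names are hypothetical.

```python
def direct_mappings(n_sources: int, n_targets: int) -> int:
    """Each source is mapped straight to each target."""
    return n_sources * n_targets

def mediated_mappings(n_sources: int, n_targets: int) -> int:
    """Each source and each target is mapped once to a shared logical model."""
    return n_sources + n_targets

for sources, targets in [(2, 2), (5, 4), (10, 8)]:
    print(sources, targets,
          direct_mappings(sources, targets),
          mediated_mappings(sources, targets))
```

Under this assumption, replacing one target DBMS touches only the single mapping between the logical model and that target, instead of one mapping per source.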

While the Conceptual Data Model (CDM) is often called the semantic model, the physical DBMS model can also be called the syntactic model, and the logical model can be called the reconciling model.

2. Business Domain Model B•DM:

Map the schema of the business glossary ENTITY to a logical data solution schema and extend the logic to the physical data solution schema in order to conduct data integrations. Logical data solution designs are the basis for physical data structures. Finally, transfer the data from the data glossary to the database with an ETL tool of your choice.

Four different logical solution design models could help to overcome the rigidity of physical mappings:

- Operational Data Store Data Model (ODS•DM)

- DataWareHouse Data Model (DWH•DM)

- Data Mart Data Model (DM•DM)

- Canonical Data Model (C•DM)

The first three are bound to physical DBMS technologies that are often adjusted to business data presentation layers, using B•DMs as intermediaries between the business glossary and the DBMS. The C•DM is the only Entity-Relationship Model (ERM) ontology that is independent of DBMS technologies.

3. Canonical Data Model, C•DM:

C•DM is one variant among diverse solution design models (e.g. ODS•DM, DWH•DM, DM•DM) that prepare data for physical DBMS integrations.

- C•DMs are standardized logical data models that serve the de•centralized scopes of business sectors.

- C•DMs constitute a releasing logic, facilitating physical DBMS integrations.

- C•DM is managed by business analysts, data scientists, and data architects and addresses the physical DBMS needs of IT specialists like developers, data engineers, and DB administrators.

- Work on a C•DM does not impact the DBMS.

4. Transversal Data Model, T•DM:

Self-evidently, the ambition of Executive Management is to control and steer the wheel of fortune transversally. Thus, management requests dashboards that show the health and progress of business activities and outcomes at any time, in any place, and in any contextual detail.

T•DMs encompass all relevant sector data sources for strategic, i.e. overarching, decision planning. A T•DM conserves interfaces between sectoral data sources in a simple form that is dedicated to business users. Thus it does not include technical attributes like primary keys, foreign keys, attributes for history management, etc. Beyond that, changes to sources are traced for approval because of their potential impact on decision-making.

- T•DMs are standardized logical data models that serve as the central space of business semantics.

- T•DMs constitute an absorbing entity-relationship logic, replicating the business glossary with reconciled, centralized, thus mastered business vocabulary.

- T•DM is managed by business analysts, data scientists, and data architects and categorizes the conceptual business vocabulary into a standardized Entity Relationship Model (ERM) ontology. The conceptual business vocabulary entry is managed by business experts, product owners, and enterprise architects.

- T•DM governs enterprise vocabulary.

- Work on a T•DM does not impact the CDM, the C•DM, or the physical DBMS.
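The rule that a T•DM leaves out technical attributes can be sketched as a simple projection. The attribute list, the `kind` labels, and the function `business_view` are hypothetical and only illustrate the principle; they do not describe any particular modeling tool.

```python
# Hypothetical attribute kinds that belong to the DBMS-oriented layer only.
TECHNICAL_KINDS = {"primary_key", "foreign_key", "history"}

# Hypothetical C•DM-style description of a "customer" entity.
cdm_customer = [
    {"name": "customer_id", "kind": "primary_key"},
    {"name": "customer_name", "kind": "business"},
    {"name": "segment", "kind": "business"},
    {"name": "region_id", "kind": "foreign_key"},
    {"name": "valid_from", "kind": "history"},
]

def business_view(attributes):
    """Keep only the attributes a business user of the T•DM would see."""
    return [a["name"] for a in attributes if a["kind"] not in TECHNICAL_KINDS]

print(business_view(cdm_customer))
```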

T•DM requires cultures that refer to mastered glossaries; cultures that actively check and use centralized vocabularies and vocabulary structures.

The establishment of such cultures is quite challenging, as traditionally built vertical teams within horizontal but hierarchically collaborating organizations need to change to transversally acting data-sharing cultures. Such cultural change calls for new roles and responsibilities. These roles adjust their focus to data quality (see integrit•ization + utilitarian•ization) at the integrational and operational levels.

New ways of working might open (business-)cultural opportunities that have not been considered yet.

5. The Context of T•DM and C•DMs:

The shape, flexibility, and elasticity of data logic patterns are crucial for quick and efficient multi-, cross-, or omni-channel campaigns (like conferences, roadshows, webcasts, etc.), but also for long-term business sector data integrations that deliver KPI figures or verify OKR targets.

The goal of the T•DM logic layer is to map the business source (natural) language to a standardized logical ERM ontology. The C•DM logic layer is adjusted to DBMS solution design requirements, thus adapting and enriching the ERM ontology with DBMS attributes.

Data architects are owners of all logic layers. They are intermediaries between business and IT.

Data logic models (T•DM & C•DM) can indicate, detect, and reflect the need of new or changing (centralized) business vocabularies in glossaries.

T•DM acts as a foundational logical parent data model for all downstream IT-driven solution design models, especially for the C•DM. T•DM is master, supervisor, and regulator of C•DM vocabularies and structures / taxonomies. Thus T•DMs can detect inconsistencies in vocabularies and reduce the likelihood of confusion about mastered vocabularies when these are transferred to C•DMs.
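A minimal sketch of such an inconsistency check, assuming that the mastered T•DM vocabulary and the C•DM terms are available as plain lists of names. The vocabularies and the function `unmastered_terms` are hypothetical.

```python
def unmastered_terms(tdm_vocabulary, cdm_terms):
    """Return C•DM terms that are not part of the mastered T•DM vocabulary."""
    mastered = {term.lower() for term in tdm_vocabulary}
    return sorted(term for term in cdm_terms if term.lower() not in mastered)

# Hypothetical vocabularies for illustration.
tdm_vocabulary = ["customer", "order", "revenue"]
cdm_terms = ["customer", "client", "order", "turnover"]

print(unmastered_terms(tdm_vocabulary, cdm_terms))
```

Terms flagged this way are either synonyms that should be reconciled with a mastered term or candidates for a new glossary entry.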

T•DM owners are innovation drivers of new data vocabularies and improved data structures. They feed their innovations back to the Conceptual Data Model (CDM) owners.

Both C•DM and T•DM can be triggered by ENTITY = {concepts, terms} and supported by relationships:

- Concept / row / entity / object / instance

- Term / column / attribute

- Relationships / predicates

The logic ENTITY {concept, term, relation} shapes entire predications through logic expressions.
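One possible reading of this, sketched under the assumption that a predication can be written as a (concept, term, value) statement derived from a table row. The function name and the sample row are hypothetical.

```python
def row_to_predications(concept, row):
    """Turn one table row into (concept, term, value) statements."""
    return [(concept, term, value) for term, value in row.items()]

# Hypothetical row: the concept "tent" with two terms (columns).
tent_row = {"weight_kg": 2.5, "capacity": 2}

for statement in row_to_predications("tent", tent_row):
    print(statement)
```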

Know-how:

"Transfer a business glossary to a computer science data model"

WHAT is DG

- the MESH•ization

Large data-digesting units can be meshed into nodes of data expertise (WHAT) and cords of collaboration (HOW) to ensure sufficient data processes.

The data mesh helps to establish a platform- and product-centric organization that is transparent to all data practitioners. The grid segregates data citizens into functions and roles and gives transparency about responsibilities. The grid is an offer to staff to register their talent, interest, and commitment for certain data subjects and thus to become data citizens. Everyone gets a chance to actively reveal their career preferences along the data mesh grid.

Beyond that, it helps strategists to identify and forecast skills and expertise levels in data matters. Management can perform resource planning if those skills are not sufficiently available.

In any case, the data mesh helps to develop a data culture, whether through campaigns, best-matching training programs, exchange in communities, etc. The data mesh is both platform and link for an incremental enrichment of data competencies.

Know-how: "Mesh your data organization to boost data competencies"

WHAT is DG

- the DMAIC•ization

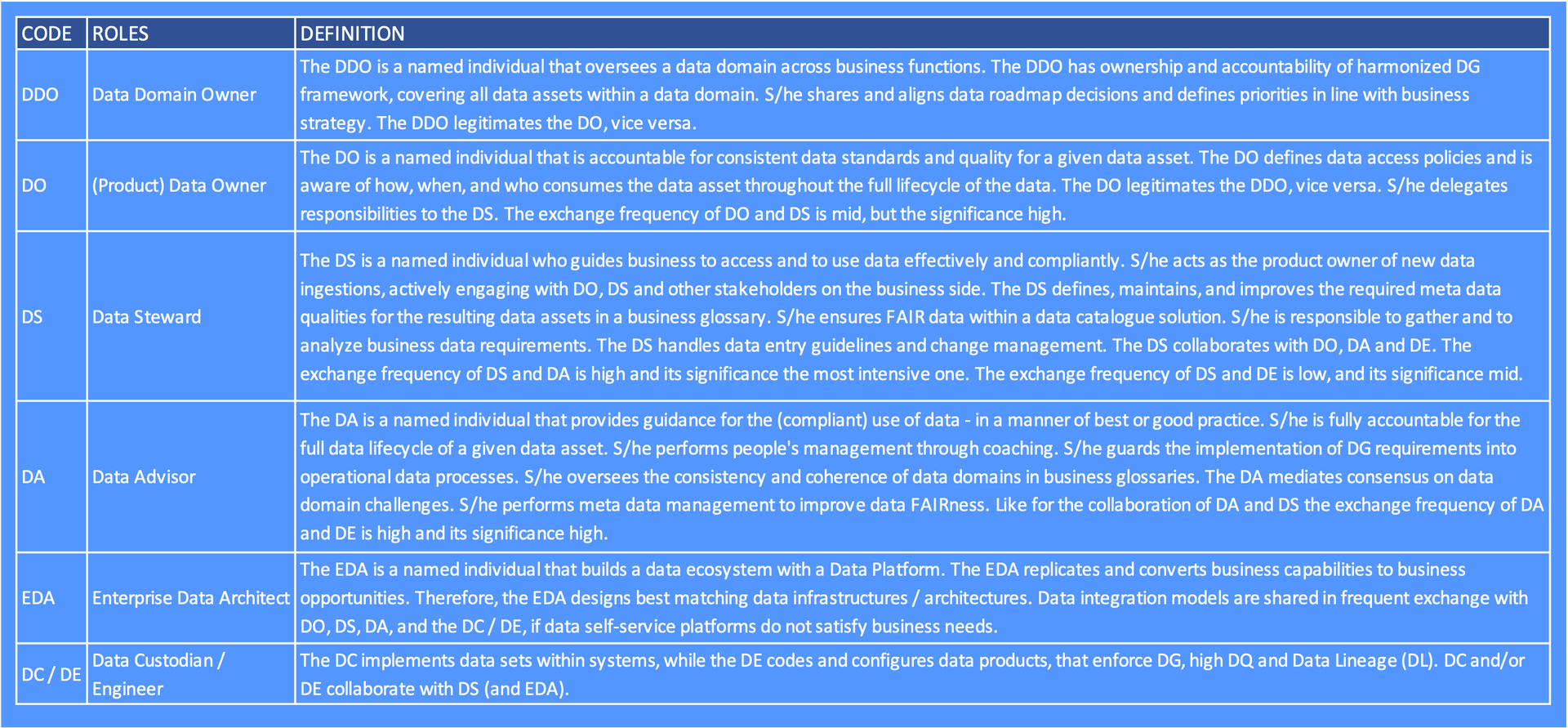

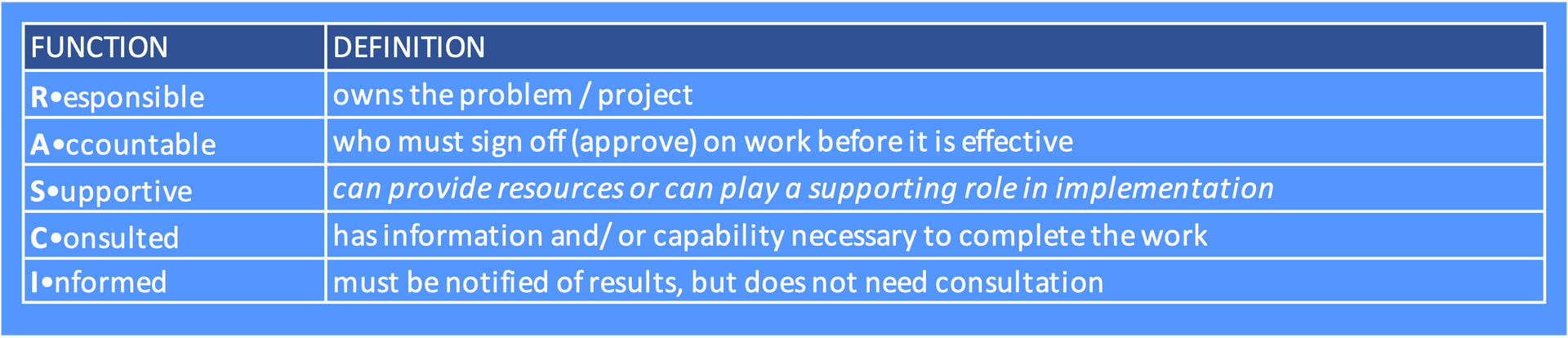

Data entries as well as required data transformations can have a negative impact on data health. Thus, governance of data is crucial to becoming compliant with regulatory requirements. A best-fitting organization of roles & responsibilities is fundamental to success.

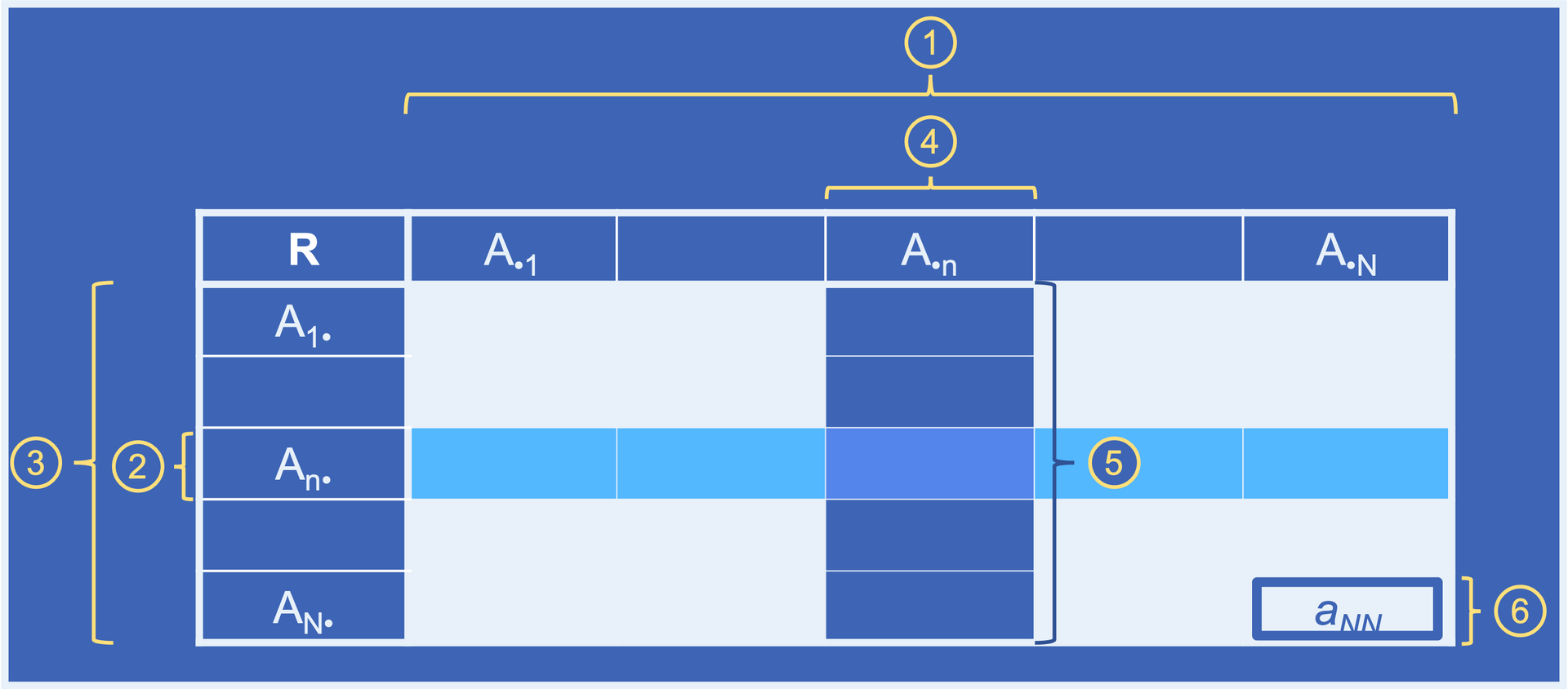

The DMAIC data quality (DQ) lifecycle process sits downstream of the data mesh organization and is an option for building a process-centric organization that wants to improve DQ during operations.

Such process-centric organizations are built on diverse roles that have levels of involvement, as in RACI. Some of the roles are assigned to business units, others to IT departments.
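A RACI assignment across the five DMAIC phases (Define, Measure, Analyze, Improve, Control) can be checked mechanically. The sketch below assumes the common RACI convention of exactly one Accountable and at least one Responsible role per activity; the role names and assignments are hypothetical.

```python
def raci_violations(matrix):
    """List activities that break the assumed RACI conventions."""
    problems = []
    for activity, assignment in matrix.items():
        levels = list(assignment.values())
        accountable = levels.count("A")
        if accountable != 1:
            problems.append(f"{activity}: {accountable} accountable roles, expected 1")
        if "R" not in levels:
            problems.append(f"{activity}: no responsible role")
    return problems

# Hypothetical assignment of business and IT roles to the DMAIC phases.
dq_raci = {
    "Define":  {"Data Owner": "A", "Data Steward": "R", "Data Engineer": "C"},
    "Measure": {"Data Owner": "I", "Data Steward": "A", "Data Engineer": "R"},
    "Analyze": {"Data Owner": "C", "Data Steward": "A", "Data Engineer": "R"},
    "Improve": {"Data Owner": "A", "Data Steward": "R", "Data Engineer": "R"},
    "Control": {"Data Owner": "A", "Data Steward": "R", "Data Engineer": "I"},
}

print(raci_violations(dq_raci))
```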

The continual improvement of DQ is supervised but also practiced by the DMAIC organization, and during the practice team members actively learn to develop an intrinsic data culture. Dedication becomes a matter of practice instead of being indoctrinated by management!

Know-how: "Incorporate DG into DQ, i.e. into the Six Sigma DMAIC lifecycle methodology, to improve DQ incrementally"

WHAT is DG

- the FAIR•ification

From experience, automated DQ checks are often still not performant enough when data labs and data scientists request rapid data integrations to respond to challenges or business opportunities with deep insights.

Corporate culture and data governance processes are often quite rigid, or not adjusted to a good and balanced dose of data compliance, integration speed, and performant access.

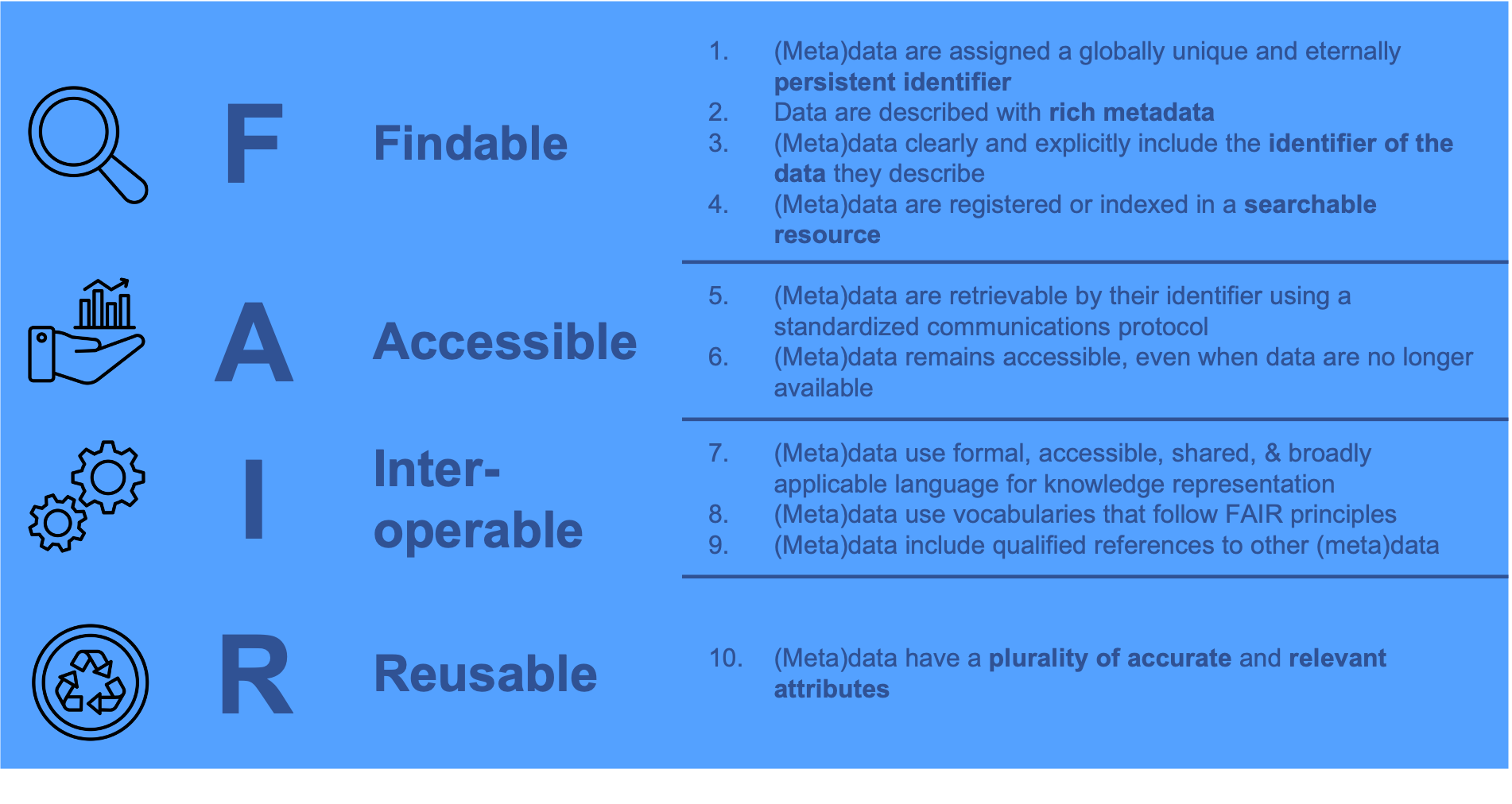

I.e., data is often not FAIR enough to be reused (FAIR stands for Findable, Accessible, Interoperable, Reusable).
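A very rough sketch of a FAIR gap check on a metadata record. It assumes a deliberately simplified mapping of each FAIR facet to one metadata field; the field names and the sample record are hypothetical, and a real FAIR assessment covers considerably more than the presence of four fields.

```python
# Simplified, assumed mapping of FAIR facets to metadata fields.
FAIR_FIELDS = {
    "Findable": "identifier",
    "Accessible": "access_url",
    "Interoperable": "format",
    "Reusable": "license",
}

def fair_gaps(metadata):
    """Return the FAIR facets whose metadata field is missing or empty."""
    return [facet for facet, field in FAIR_FIELDS.items() if not metadata.get(field)]

# Hypothetical dataset description without a license.
dataset = {
    "identifier": "ds-001",
    "access_url": "https://example.org/ds-001",
    "format": "text/csv",
}

print(fair_gaps(dataset))
```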

Know-how: "Follow 10 principles to succeed in the FAIRification of data and metadata"

WHAT is DG

- the key messages

A data model is either an overarching Transversal Data Model (T•DM) or an expert, more sectoral, so-called Canonical Data Model (C•DM). Both data models can be triggered by ENTITY = {concepts, terms} and supported by relationships:

- Concept / row / entity / object / instance

- Term / column / attribute

- Relationships / predicates

The logic ENTITY {concept, term, relation} shapes entire predications through logic expressions.

However, only the complementary combination of T•DM and C•DM delivers a reliable architecture that covers all requirements and expectations of managers and experts.

The selected solution design needs to be compiled / customized with physically materialized data structures, adopted by ODS, middleware, DWH, lakes, applications, etc. Finally, business data from the glossary can be pumped through ETL pipelines into materialized views of the target DBMS for further consumption by business units.

The model•ization process is similar to the glossar•ization process.

Both, glossar•ization and model•ization, are supported by robust organizations (MESH and DMAIC) and FAIR data principles.